This is a #Hacktoberfest training git repo on GitHub. It contains resources for learning Git. 99% of the contributions in this repository are from different first time beginners just like you. So, jump right in! 🎯


Hacktoberfest is a month-long celebration of open source software run by DigitalOcean in partnership with GitHub and Dev.

- Hacktoberfest is open to everyone in our global community!

- Four quality pull requests must be submitted to public GitHub repositories.

- You can sign up anytime between October 1 and October 31.

Listing a few git resources here for everyone.

- An interactive basic tutorial to Git: try.github.io

- A very light, but not gentle 👊, guide to Git: git – the simple guide

- Novice 🤓 to Ninja ⚔, a complete guide on becoming a Git Guru

- Vogella Git Tutorial

- The (Written) Unwritten Guide to Pull Requests by Blake Riosa

There is a ton of learning material on the web.

- Try Git — learn Git in 15 minutes with pseudo-terminal interface

- How to Create a Pull Request — learn to create a GitHub Pull Request

- GitHub Learning Paths — learn about Git, Setup, GitHub, and a lot more

- Atlassian Git Tutorial — comprehensive tutorial on Git

- Use gitk to understand git — all important Git terms (commit, commit SHA, branch, merge, rebase) explained using gitk

- Learn Version Control with Git — freemium ebook from fournova Software (makers of Tower), associated with paid video course

- Learn Enough Git to Be Dangerous – a tutorial on version control with git

- Pro Git — free Git book (CC BY-NC-SA 3.0)

- The Git Community Book — book built by dozens of people in the Git community

- Git Pocket Guide — a short O’Reilly book on Git

- Git Real: Code School — paid training course from Code School

- Git Branching — visual way to learn git branching

- Learn Git in a Month of Lunches — tutorial-based book by Manning Publications

- Git Magic — short book about Git

- Git from the bottom up — great series of articles about Git

- Git-it — app-tutorial on Git

- Learn Git – Codecademy — This course will show you basic workflow and core features, different ways to undo changes or save multiple versions of a project, and how to collaborate with other developers

- Migrating to Git LFS — Use Git LFS on an existing repository to manage large files in a better way

- Learn Git Branching — Interactive way to learn how git branching works

- An Introduction to Git and GitHub by Brian Yu – CS 50 Git Tutorial

- Version Control with Git — The essentials of using the version control system Git

- GitHub & Collaboration — The essentials of working with remote repositories

- How to Use Git and GitHub — Effective use of version control

- Getting Started with Git

- Learn to Manage Code Based Projects and Collaborate Using GitHub

- Introduction to Git by DataCamp

- Become an Expert in Git & GitHub in 4 Hours

- Beginner Tutorial: How to use Git for WordPress development by NICK SCHÄFERHOFF

- WordPress + Git = ♥ – Presenting VersionPress

- A full tutorial on managing a WordPress website with Git, consisting of 4 articles by Delicious Brains

- Top 8 Resources: WordPress with Git by PANTHEON

- Keeping WordPress Under “Version” Control with Git by Steve Grunwell

- Introductory guide to Git for WordPress with resources and tools

- 8 Ways to Sync Git Repositories with WordPress

Various tools for daily operations

- GitHub Desktop — Git client by GitHub. Works seamlessly with GitHub and GitHub Enterprise

- SourceTree — free (as in beer) GUI client. Windows and Mac only

- GitKraken — a cross-platform Git client for Windows, Mac & Linux. Electron based. Free for non-commercial use; a paid Pro version is available.

- Tower — a popular non-free Git GUI client. Mac and Windows

- SmartGit — a commercial comprehensive SCM client with Git, SVN, Mercurial. cross-platform (works on Windows, Mac and Linux)

- RabbitVCS — TortoiseSVN inspired graphic tool for version control systems, with Nautilus and Thunar integration

- gitg — an open-source GTK+ GUI client

- git-cola — a cross-platform Git GUI client

- SGit — Git client for Android 4.x

- Ungit — The easiest way to use git. On any platform. Anywhere.

- MyRepos — a tool to manage multiple version control repositories

- awesome-git-addons — lists more than 20 git addons including all available commands

- GitIgnore Collection — collection of gitignore files for various programming languages

- git-extras – git utilities adding useful git commands

- git-extra-commands — Another collection of useful git commands

- GitUp — a clean, minimal Git client. Mac only.

- GitExtensions — a shell extension, a Visual Studio 2010-2015 plugin and a standalone Git repository tool.

- Octotree — a must-have browser extension to explore code on GitHub

- Tig – text-mode interface for Git

- Sublime Merge – a cross-platform Git client from the makers of Sublime Text.

- Gitless – an experimental version of Git that changes some of Git’s underlying concepts

- ghq — Organization for remote repositories

- bash-git-prompt – An informative and fancy bash prompt for Git users

Git is designed for source control management. Extensions help people extend the idea and push version control everywhere.

- Git Large File Storage — practical solution for versioning large files. supported by GitHub

- Git Virtual File System or GVFS — solution for managing very large Git repository while maintaining speed and efficiency of most operations, in development by Microsoft.

- git-annex — allow managing massive binaries among machines, as if operating a standard git repository. Possible to create a synchronized folder with git-annex assistant

- GitLens – A Visual Studio Code extension which helps you visualize code authorship at a glance via Git blame annotations and code lens. Allows you to seamlessly explore Git repositories and gain valuable insights via powerful comparison commands.

People have plenty of options to host their source code

- GitHub — the de-facto git hosting service. Perfect integration with most external services.

- GitLab.com — a free Git repository hosting service served by GitLab EE. Unlimited repositories and private collaborators

- BitBucket — well-known for its free private repository (5 user max).

- Kiln — paid Git repository hosting service

- CodePlex — Microsoft’s free open source code hosting service with many ASP/C# OSS projects

- AWS CodeCommit — a SaaS service provided by Amazon Web Services on high availability infrastructure

- Codeplane — a paid Git repository hosting service with no contributor limit

- Deveo — a paid repository hosting service with support for Git, Subversion, Mercurial, WebDAV

- SourceForge — a long-standing free Git repository hosting service

Or you can host the code yourselves

- Gitolite — a simple Git server with fine-grained access control

- GitHub Enterprise — self-hosted solution provided by GitHub

- Bitbucket Server — self-hosted solution from Atlassian. Good integration with JIRA and other Atlassian products

- GitLab CE/EE — a popular open-source Git server (CE) with paid support option (EE).

- Upsource — recent offer from JetBrains, a famous developer-oriented software company. Code repository hosting feature pending. Free for 10 users. Good integration with YouTrack and TeamCity

- GitBucket — a GitHub clone powered by Scala.

- Gogs — a self-hosted Git Service written in Go.

- Gitea – a community managed fork of Gogs

- GitBlit — Pure Java stack for managing, viewing, and serving Git repositories.

- Apache Allura — an open source implementation of project hosting platform

- Phabricator — an integrated set of powerful tools to help companies build higher quality software

- RhodeCode CE/EE — a platform delivering enterprise source code management

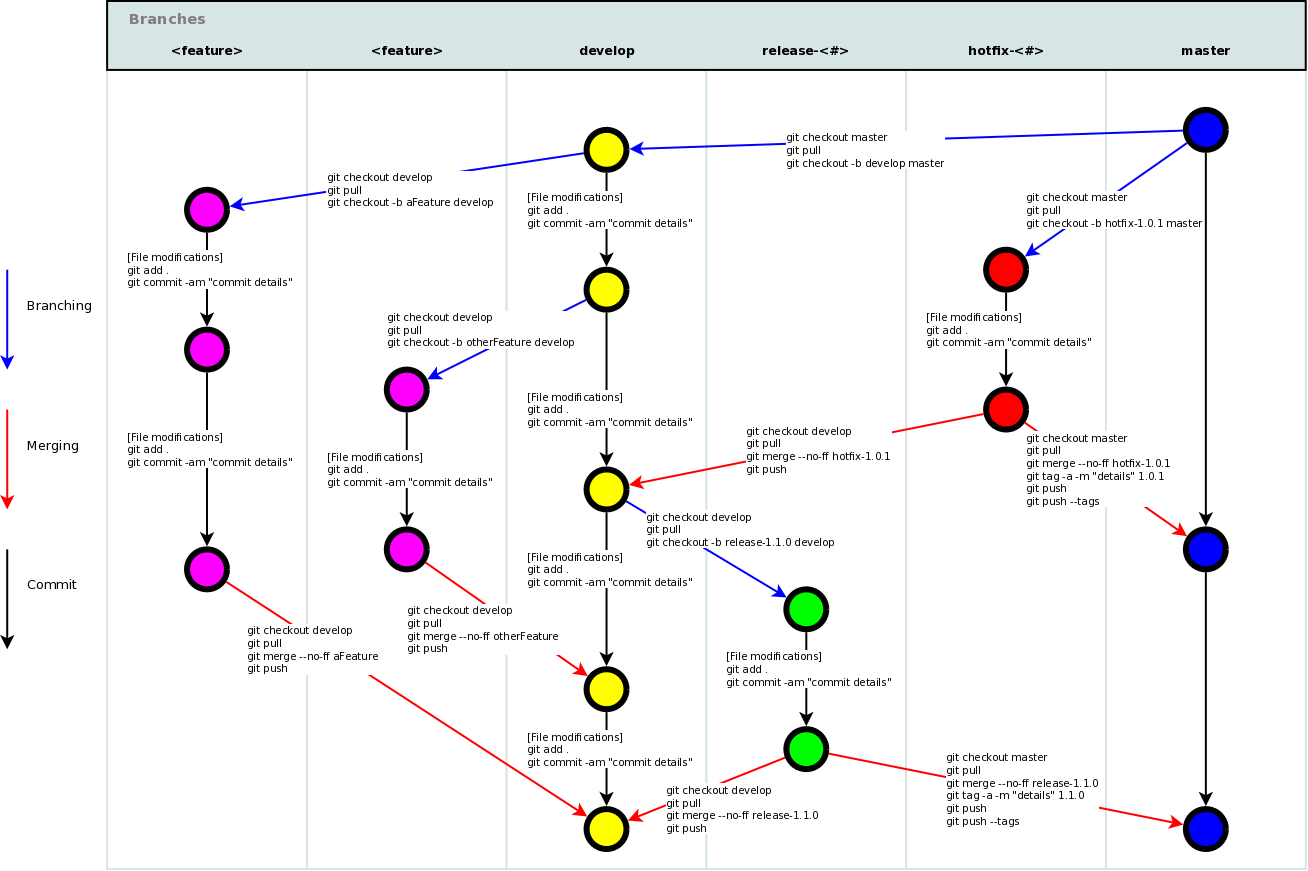

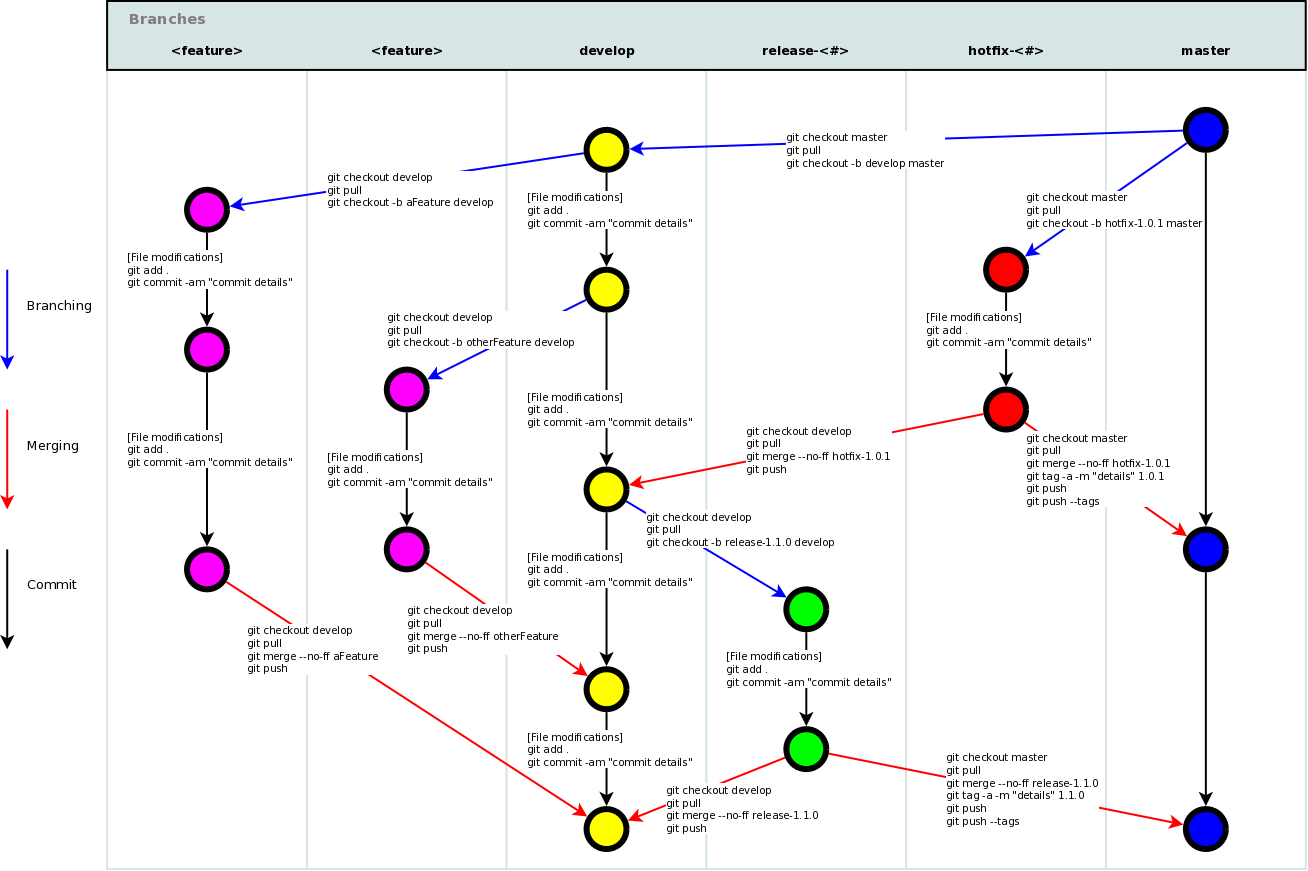

Inexpensive branching allows people to adopt workflows other than the classic centralized workflow.

- Pro Git – Distributed Workflows

- Atlassian Git Tutorial – Comparing Workflows

- Gitflow — the most well-known Git workflow model

- GitHub flow — a simple branching model with a single master

- GitLab flow

- Git DMZ Flow

Git provides hooks at the commit and push phases, allowing integration with code quality checking tools and Continuous Integration (CI).

- pre-commit — a framework for managing and maintaining multi-language pre-commit hooks from Yelp. Extensive support for multiple programming languages.

- Overcommit — an extendable Git hook manager written in Ruby.

- git-hooks — tool to manage project, user, and global Git hooks

- quickhook — a fast, Unix’y, opinionated Git hook runner

- husky – Git hooks for Node.js, manage your hooks from your package.json
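To make the hook mechanism concrete, here is a minimal sketch of a hand-written pre-commit hook, without any of the managers listed above. The marker text `DO NOT COMMIT`, the file name `app.txt`, and the temporary repository are assumptions chosen for illustration only.

```shell
# Throwaway repository so no real project is touched.
work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
git config user.name "Demo User"
git config user.email "demo@example.com"
# Guard against a global core.hooksPath pointing somewhere else.
git config core.hooksPath .git/hooks
mkdir -p .git/hooks

# The hook rejects any commit whose staged diff contains the marker.
cat > .git/hooks/pre-commit <<'EOF'
#!/bin/sh
if git diff --cached | grep -q "DO NOT COMMIT"; then
  echo "pre-commit: remove the DO NOT COMMIT marker first" >&2
  exit 1
fi
EOF
chmod +x .git/hooks/pre-commit

# First attempt contains the marker, so the hook should stop it.
echo "DO NOT COMMIT: debug line" > app.txt
git add app.txt
if git commit -q -m "Should be blocked" 2>/dev/null; then
  blocked=no
else
  blocked=yes
fi

# Second attempt is clean and should go through.
echo "clean line" > app.txt
git add app.txt
git commit -q -m "Clean commit"
commit_count=$(git rev-list --count HEAD)

echo "blocked: $blocked, commits: $commit_count"
```

A non-zero exit status from the hook is what aborts the commit. Hooks in `.git/hooks` are not versioned with the repository, which is the gap the hook managers above try to fill.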

A curated list of GitHub’s Resources.

The awesomeness is currently organized into just a few different buckets:

- Information for People Who Are New to GitHub

- Resources for Those Already Familiar With GitHub

- Tips, Tricks, Tools, and Add-Ons for GitHub Power Users

- Novel Uses of GitHub

- The Beginner’s Guide to Hacktoberfest 2019 & Winning the T-shirt

- Code School’s Try Git — If you’d like to better understand Git, one of the technologies that makes GitHub possible, this is a great place to start. No GitHub account required.

- Git-it — 💻 🎓 A workshopper for learning Git and GitHub.

- On-Demand GitHub Training — Self-paced, interactive projects created and maintained by GitHub’s own Training team.

- Bingo Board — Play bingo 💥 by sending pull requests!

- Writing on GitHub — GitHub’s own guide to using GitHub for more than just software development.

- GitHubGuides — GitHub Training & Guides on YouTube.

- GitHub Pages — Websites for you and your projects. Hosted directly from your GitHub repository. Just edit, push, and your changes are live.

- Filetypes that GitHub can do magic with:

- GeoJSON/TopoJSON — Instantly render maps when you add a GeoJSON file to a repository.

- iPython/Jupyter — Yes, that’s right, GitHub also renders ipynb files right in the browser. The possibilities are endless.

- PDF — View PDFs right in your browser.

- STL files — It’s pretty amazing, 3D right in the browser.

- CSV — Data journalists and civic data nerds rejoice, comma separated values right in the browser!

- SVG — Not only can you view scalable vector graphics in the browser, but you can see the difference between versions visually! You’ve got to see it to believe it. (In fact, you can do this with most image files.)

- PSD — That’s right, same idea as SVG, but for Photoshop files!

- GitHub Government Community — Information on joining GitHub’s government community — a collaborative community for sharing best practices in furtherance of open source, open data, and open government efforts.

- Classroom for GitHub — Your course assignments on GitHub.

- Fun with GitHub — A fun overview of GitHub.

- GitHub Cheat Sheet — Use this list to test your GitHub knowledge.

- GitHub Universe — Two full days on how to build, collaborate, and deploy great software, presented by GitHub. October 1 & 2, 2015, SF.

- GitHub Desktop — Simple collaboration from your desktop.

- Atom — Did you know that GitHub makes an editor? Use it to write, code, and more.

- Electron — Build cross platform desktop apps with web technologies

- GitHub Buttons — Showcase your GitHub repo’s success with hotlinkable GitHub star, fork, or follow buttons.

- Resume — Resumes generated using GitHub.

- Speaker Deck — Share Presentations without the Mess, by GitHub.

- Blocks — This is a simple viewer for code examples hosted on GitHub Gist. (Introduction is here)

- Block Builder — Quickly create, edit, and fork D3.js examples

- GitHub Template Guidelines — Guidelines for creating template files for a GitHub project.

- GitHub Integrations Directory — Use your favorite tools with GitHub.

- GitHub Cheat Sheet — Use this list to test your GitHub knowledge. (A resource so good, it’s worth mentioning twice.)

- A collection of awesome browser extensions for GitHub. — Well, the link kinda’ says it all.

- Gitter — Chat, for GitHub. Unlimited public rooms and one-to-one chats, free.

- ZenHub — Project management inside of GitHub, including kanban boards and more.

- HuBoard — Instant project management for your GitHub issues (sadly, no free plan, but appears to be open source)

- Overv.io — Agile project management for teams who love GitHub. Kanban boards and more.

- Penflip — Collaborative writing and version control, powered by GitLab (similar to GitHub).

- Gitbook — A modern publishing toolchain. Simply taking you from ideas to finished, polished books.

- Prose — Prose provides a beautifully simple content authoring environment for CMS-free websites. It’s a web-based interface for managing content on GitHub.

- Redliner — A tool for facilitating the redlining of documents with the GitHub uninitiated.

- Gatekeeper — Enables client-side applications to dance OAuth with GitHub.

- github-secret-keeper — Microservice to enable GitHub login for multiple server-less applications.

- Hub — A command line tool that wraps git in order to extend it with extra features and commands that make working with GitHub easier.

- Ghizmo — A command line for GitHub, allowing access to all APIs.

- cli-github — GitHub made pretty, within the command line.

- GitHub Dark — A sophisticated dark theme for GitHub.

- github-issues-import — A Python script that allows you to import issues and pull requests from one GitHub repository to another

- Github-Auto-Issue-Creator — A Python script that searches a GitHub repository (locally) and automatically creates GitHub issues for TODO statements, keeping them tracked.

- Problem Child — Allows authenticated or anonymous users to fill out a standard web form to create GitHub issues (and pull requests).

- gitify — All your GitHub notifications on your menu.

- HubPress — A web application to build your Blog on GitHub

- TinyPress — TinyPress is the easiest way to publish a blog on GitHub.

- Issue and Pull Request Template Generator — Generate templates customized to your project, with the help of Cthulhu and Lewis Carroll

- Noteit — Manage your notes at CLI with GitHub Gists.

- Zappr — A free/open-source GitHub integration that removes bottlenecks around pull request approval and helps dev teams to painlessly abide by compliance requirements.

- Migrating to Git LFS — Easily manage huge files in your Git projects, useful for Data Science projects

- Mozilla Festival — Mozfest 2015 Program on GitHub.

- Open Source Society University — Path to a free self-taught education in Computer Science.

- Clef’s Handbook — An employee handbook built for inclusion.

- The United States Code — The complete United States Code.

- Le code civil français sous git — French Civil Code on GitHub.

- Gitron — A web game about GitHub based on Tron movies.

- Using Ansible with DigitalOcean

- Ansible Cloud Modules – DigitalOcean

- Terraform – DigitalOcean Provider

- DigitalOcean in Action! – Example repo for using DigitalOcean with Terraform and Ansible.

- API

- Spaces API

- OAuth

- Droplet Metadata

- Official DO Release Notes – Notes tracking incremental improvements and major releases for the DigitalOcean cloud platform.

- GitHub – Hosting service for Git repositories.

- Cheat Sheet

- Git Cheat Sheet & Git Flow

- Browser Extensions

- Git Tips

- Open Source @ DigitalOcean

- Netbox

- Doctl – Official command-line interface for the DigitalOcean API.

- go-libvirt

- go-qemu

- Learn VSCode — VSCode.pro Power User Course

- Vim

- Sublime Text

- do-sshuttle – Transparent Proxying via sshuttle to DigitalOcean Droplet.

- drophosts – Update /etc/hosts with peer droplets.

- droplan – Manage iptables rules for the private interface on DigitalOcean droplets.

- foreman-digitalocean – Plugin to enable management of DigitalOcean droplets in Foreman.

- lita-digitalocean – Lita handler for managing DigitalOcean services.

- DDNS – Personal DDNS client with DigitalOcean Networking DNS as backend.

- Less Confusing Menus – A Chrome extension that makes account menus less confusing.

- DigitalOcean Droplet creator – A dialog-based shell script to quickly create a single DigitalOcean Droplet.

- do-upgrade-plans – A script to upgrade your DigitalOcean Droplets to better plans with the same cost.

- doctl – Command-line tool for DigitalOcean services.

- digitalocean-indicator – Debian Gnome panel client.

- domanager – Linux and Windows System Tray Client.

- OceanBar – macOS menu bar client.

- Tugboat – Ruby command-line tool for DigitalOcean services, focusing on a more guided UX.

- Building a Kubernetes cluster on DigitalOcean using Kubicorn

- Reddit community

- ##digitalocean on freenode

- Engineering Code of Conduct

- User Scripts – User data scripts to help provision apps on a Droplet.

- Hacktoberfest – Month-long celebration of open source software in partnership with GitHub.

- Set Up

- Configuration Files

- Create

- Local Changes

- Search

- Commit History

- Branches & Tags

- Update & Publish

- Merge & Rebase

- Undo

- Git Flow

$ git config --list

$ git config --local --list

$ git config --global --list

$ git config --system --list

$ git config --global user.name "[firstname lastname]"

$ git config --global user.email "[valid-email]"

$ git config --global color.ui auto

$ git config --global core.editor vi

<repo>/.git/config

~/.gitconfig

/etc/gitconfig
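The three files above correspond to the `--local`, `--global`, and `--system` scopes, and a more specific scope overrides a more general one. The following is a small sketch of that lookup order in a throwaway repository. The name `Demo User` is a placeholder, not a recommended value.

```shell
# Work in a temporary repository so real settings stay untouched.
demo=$(mktemp -d)
cd "$demo"
git init -q

# Write a value into the repository-level file (<repo>/.git/config).
git config --local user.name "Demo User"

# Read it back from the local scope only, then as Git resolves it overall.
local_name=$(git config --local --get user.name)
effective_name=$(git config --get user.name)

echo "local:     $local_name"
echo "effective: $effective_name"
```

Both lines print the local value, even on a machine where `~/.gitconfig` sets a different `user.name`, because the repository-level file is read last.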

There are two ways:

Via SSH

$ git clone ssh://user@domain.com/repo.git

Via HTTP

$ git clone http://domain.com/user/repo.git

$ git init

$ git status

$ git diff

$ git add .

$ git add -p <file>

$ git commit -a

$ git commit

$ git commit -m 'message here'

$ git commit -am 'message here'

$ git commit --date="`date --date='n day ago'`" -am "<Commit Message Here>"

Don’t amend published commits!

$ git commit -a --amend

Don’t amend published commits!

$ git commit --amend --no-edit

$ GIT_COMMITTER_DATE="date" git commit --amend

$ git commit --amend --date="date"

$ git stash

$ git checkout branch2

$ git stash pop

$ git stash apply

- {stash_number} can be obtained from git stash list

$ git stash apply stash@{stash_number}

$ git stash drop
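The stash commands above can be combined to carry uncommitted work from one branch to another. This is a minimal sketch in a temporary repository. The file name `notes.txt` and the identity values are assumptions for illustration, and `branch2` mirrors the placeholder used above.

```shell
# Temporary repository with one commit and a second branch.
work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
git symbolic-ref HEAD refs/heads/master
git config user.name "Demo User"
git config user.email "demo@example.com"

echo "v1" > notes.txt
git add notes.txt
git commit -q -m "Add notes"
git branch branch2

# Make an uncommitted change on master, then shelve it.
echo "v2" >> notes.txt
git stash > /dev/null

# The working tree is clean while the change sits in the stash.
clean_status=$(git status --porcelain)

# Switch branches and bring the change back.
git checkout -q branch2
git stash pop > /dev/null
moved_status=$(git status --porcelain)
current_branch=$(git rev-parse --abbrev-ref HEAD)

echo "on $current_branch with:$moved_status"
```

`git stash pop` removes the entry from the stash after applying it, while `git stash apply` leaves it in place for reuse.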

$ git grep "Hello"

$ git grep "Hello" v2.5

Show all commits, starting with newest (it’ll show the hash, author information, date of commit and title of the commit):

$ git log

$ git log --oneline

$ git log --author="username"

$ git log -p <file>

$ git log --oneline <origin/master>..<remote/master> --left-right

$ git log --all --decorate --oneline --graph

$ git blame <file>

$ git reflog show

$ git reflog delete

$ git branch

$ git branch -a

$ git branch -r

$ git checkout <branch>

$ git checkout <branch> -- <filename>

$ git checkout -b <branch>

$ git checkout <commit-hash> -b <new_branch_name>

$ git branch <new-branch>

$ git branch --track <new-branch> <remote-branch>

$ git branch -d <branch>

$ git branch -m <new_branch_name>

You will lose unmerged changes!

$ git branch -D <branch>

$ git tag <tag-name>

$ git tag -a <tag-name>

$ git remote -v

$ git remote show <remote>

$ git remote add <remote> <url>

$ git fetch <remote>

$ git pull <remote> <branch>

$ git pull origin master

$ git pull --rebase <remote> <branch>

$ git push <remote> <branch>

$ git push <remote> :<branch> (since Git v1.5.0)

OR

$ git push <remote> --delete <branch> (since Git v1.7.0)

$ git push --tags
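To try the publish and delete commands without touching a real server, a bare repository on the local disk can stand in for the remote. The sketch below assumes hypothetical paths under a temporary directory and a branch named `topic`.

```shell
# A bare repository plays the role of the remote server.
work=$(mktemp -d)
git init -q --bare "$work/remote.git"
git init -q "$work/local"
cd "$work/local"
git symbolic-ref HEAD refs/heads/master
git config user.name "Demo User"
git config user.email "demo@example.com"

echo "hello" > README
git add README
git commit -q -m "Initial commit"

# Register the remote and publish two branches.
git remote add origin "$work/remote.git"
git push -q origin master
git checkout -q -b topic
git push -q origin topic
branches_before=$(git ls-remote --heads origin | grep -c refs/heads/)

# Delete the branch on the remote; the local branch is kept.
git push -q origin --delete topic
branches_after=$(git ls-remote --heads origin | grep -c refs/heads/)

echo "remote branches: $branches_before -> $branches_after"
```

Deleting the remote branch does not remove the local `topic` branch; that still needs `git branch -d topic`.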

$ git config --global merge.tool meld

$ git mergetool

$ git merge <branch>

$ git merge --no-ff <branch>

Don’t rebase published commits!

$ git rebase <branch>

$ git rebase --abort

$ git rebase --continue

$ git add <resolved-file>

$ git rm <resolved-file>

$ git rebase -i <commit-just-before-first>

Now replace this,

pick <commit_id>

pick <commit_id2>

pick <commit_id3>

to this,

pick <commit_id>

squash <commit_id2>

squash <commit_id3>
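The pick-to-squash edit described above is normally done by hand in an editor. For experimenting, it can be scripted through the `GIT_SEQUENCE_EDITOR` environment variable. The sketch below is one possible way to do that. The helper script `squash-editor.sh` is hypothetical and exists only for this example.

```shell
# Throwaway workspace: a repository plus a tiny todo-list editor script.
work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
git config user.name "Demo User"
git config user.email "demo@example.com"

# One base commit followed by three small commits to squash.
for n in 0 1 2 3; do
  echo "line $n" >> notes.txt
  git add notes.txt
  git commit -q -m "Commit $n"
done

# Hypothetical helper: keep the first "pick", turn later ones into "squash".
cat > "$work/squash-editor.sh" <<'EOF'
#!/bin/sh
awk 'NR > 1 && $1 == "pick" { $1 = "squash" } { print }' "$1" > "$1.tmp"
mv "$1.tmp" "$1"
EOF
chmod +x "$work/squash-editor.sh"

# Git passes the todo file to the sequence editor. GIT_EDITOR=true accepts
# the combined commit message without opening an interactive editor.
GIT_SEQUENCE_EDITOR="$work/squash-editor.sh" GIT_EDITOR=true \
  git rebase -q -i HEAD~3

commit_count=$(git rev-list --count HEAD)
echo "commits after squash: $commit_count"
```

The three most recent commits end up as a single commit on top of the base commit, with their messages concatenated.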

$ git reset --hard HEAD

$ git reset HEAD

$ git checkout HEAD <file>

$ git revert <commit>

$ git reset --hard <commit>

$ git reset --hard <remote/branch> e.g., upstream/master, origin/my-feature

$ git reset <commit>

$ git reset --keep <commit>

$ git rm -r --cached .

$ git add .

$ git commit -m "remove xyz file"
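The difference between `git revert` and `git reset --hard` is easiest to see by counting commits. This is a small sketch in a temporary repository. The file name and commit messages are placeholders.

```shell
# Temporary repository with two commits.
work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
git config user.name "Demo User"
git config user.email "demo@example.com"

echo "one" > file.txt
git add file.txt
git commit -q -m "Add one"
echo "two" >> file.txt
git commit -q -am "Add two"

# revert: history grows by one commit that undoes "Add two".
git revert --no-edit HEAD > /dev/null
count_after_revert=$(git rev-list --count HEAD)
content_after_revert=$(cat file.txt)

# reset --hard: the branch moves back one commit and the revert is dropped.
git reset -q --hard HEAD~1
count_after_reset=$(git rev-list --count HEAD)

echo "after revert: $count_after_revert commits, file contains: $content_after_revert"
echo "after reset:  $count_after_reset commits"
```

Revert is safe on published history because it only adds a commit. Reset rewrites the branch, so it is better kept to commits that have not been shared.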

Improved Git-flow

$ brew install git-flow-avh

$ port install git-flow

$ sudo apt-get install git-flow

$ wget -q -O - --no-check-certificate https://raw.githubusercontent.com/petervanderdoes/gitflow/develop/contrib/gitflow-installer.sh install <state> | bash

Git flow needs to be initialized in order to customize your project setup. Start using git-flow by initializing it inside an existing git repository:

You’ll have to answer a few questions regarding the naming conventions for your branches. It’s recommended to use the default values.

git flow init

OR

git flow init -d

git flow feature start MYFEATURE

git flow feature finish MYFEATURE

Are you developing a feature in collaboration? Publish a feature to the remote server so it can be used by other users.

git flow feature publish MYFEATURE

git flow feature pull origin MYFEATURE

8) Now go to that existing project and create a pull request by pressing the compare and pull request button.

git flow feature track MYFEATURE

Support preparation of a new production release. Allow for minor bug fixes and preparing meta-data for a release

To start a release, use the git flow release command. It creates a release branch created from the ‘develop’ branch. You can optionally supply a [BASE] commit sha-1 hash to start the release from. The commit must be on the ‘develop’ branch.

git flow release start RELEASE [BASE]

It’s wise to publish the release branch after creating it so that other developers can add release commits. Do it the same way as feature publishing, with the command:

git flow release publish RELEASE

git flow release finish RELEASE

Hotfixes arise from the necessity to act immediately upon an undesired state of a live production version. May be branched off from the corresponding tag on the master branch that marks the production version.

$ git flow hotfix start VERSION [BASENAME]

The version argument hereby marks the new hotfix release name. Optionally you can specify a basename to start from.

By finishing a hotfix it gets merged back into develop and master. Additionally the master merge is tagged with the hotfix version

git flow hotfix finish VERSION
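For readers without git-flow installed, the hotfix cycle described above can be approximated with plain Git commands. This is only a rough sketch of the idea (branch from master, merge into master and develop, tag the master merge), not a reproduction of what git-flow does internally. The version numbers and file names are made up for the example.

```shell
# Temporary repository with a released master and an ongoing develop branch.
work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
git symbolic-ref HEAD refs/heads/master
git config user.name "Demo User"
git config user.email "demo@example.com"

echo "v1" > app.txt
git add app.txt
git commit -q -m "Release 1.0.0"
git tag -a 1.0.0 -m "Version 1.0.0"

git checkout -q -b develop
echo "wip" > feature.txt
git add feature.txt
git commit -q -m "Work in progress"

# Roughly "hotfix start 1.0.1": branch off the production branch.
git checkout -q -b hotfix/1.0.1 master
echo "hotfix" >> app.txt
git commit -q -am "Fix production bug"

# Roughly "hotfix finish 1.0.1": merge into master, tag, merge into develop.
git checkout -q master
git merge -q --no-ff -m "Merge hotfix/1.0.1" hotfix/1.0.1
git tag -a 1.0.1 -m "Hotfix 1.0.1"
git checkout -q develop
git merge -q --no-ff -m "Merge hotfix/1.0.1 into develop" hotfix/1.0.1
git branch -q -d hotfix/1.0.1

master_version=$(git describe --tags master)
echo "master is now at $master_version"
```

After the last step the fix is present on both long-lived branches, and the tag marks the new production state on master.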

$ git squash fixed-cursor-styling "Fixed cursor styling"

$ git squash 95b7c52

$ git squash HEAD~3

$ git summary

project : git

repo age : 10 years

active : 11868 days

commits : 40530

files : 2825

authors :

15401 Junio C Hamano 38.0%

1844 Jeff King 4.5%

$ git line-summary

project : gulp

lines : 3900

authors :

1040 Contra 26.7%

828 Sindre Sorhus 21.2%

$ git effort

file commits active days

.gitattributes............................... 3 3

.gitignore................................... 265 226

.mailmap..................................... 47 40

$ git authors

Contra <contra@maricopa.edu>

Eric Schoffstall <contra@wearefractal.com>

Sindre Sorhus <sindresorhus@gmail.com>

$ git changelog

## 3.9.0

- add babel support

- add transpiler fallback support

- add support for some renamed transpilers (livescript, etc)

- add JSCS

- update dependecies (liftoff, interpret)

- documentation tweaks

## 3.8.11

- fix node 0.12/iojs problems

- add node 0.12 and iojs to travis

- update dependencies (liftoff, v8flags)

- documentation tweaks

$ git commits-since yesterday

... changes since yesterday

TJ Holowaychuk - Fixed readme

$ git count

total 855

$ git create-branch development

Total 3 (delta 0), reused 0 (delta 0)

To https://github.com/tj/git-extras.git

* [new branch] HEAD -> development

Branch development set up to track remote branch development from origin.

Switched to a new branch 'development'

$ git delete-submodule lib/foo

$ git delete-tag v0.1.1

Deleted tag 'v0.1.1' (was 9fde751)

To https://github.com/tj/git-extras.git

- [deleted] v0.1.1

$ git delete-merged-branches

Deleted feature/themes (was c029ab3).

Deleted feature/live_preview (was a81b002).

Deleted feature/dashboard (was 923befa).

$ git fresh-branch docs

Removing .DS_Store

Removing .editorconfig

Removing .gitignore

$ git guilt `git log --until="3 weeks ago" --format="%H" -n 1` HEAD

Paul Schreiber +++++++++++++++++++++++++++++++++++++++++++++(349)

spacewander +++++++++++++++++++++++++++++++++++++++++++++(113)

Mark Eissler ++++++++++++++++++++++++++

$ git merge-into master

Switched to branch 'master'

Your branch is up-to-date with 'origin/master'.

Updating 9fde751..e62edfa

Fast-forward

234 | 0

1 file changed, 0 insertions(+), 0 deletions(-)

create mode 100644 234

Switched to branch 'development'

$ git graft development

Your branch is up-to-date with 'origin/master'.

Merge made by the 'recursive' strategy.

package.json | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

Deleted branch development (was 64b3563).

$ git alias last "cat-file commit HEAD"

$ git alias

last = cat-file commit HEAD

$ git ignore build "*.o" "*.log"

... added 'build'

... added '*.o'

... added '*.log'

$ git info

## Remote URLs:

origin git@github.com:sampleAuthor/git-extras.git (fetch)

origin git@github.com:sampleAuthor/git-extras.git (push)

## Remote Branches:

origin/HEAD -> origin/master

origin/myBranch

## Local Branches:

myBranch

* master

## Most Recent Commit:

commit e3952df2c172c6f3eb533d8d0b1a6c77250769a7

Author: Sample Author <sampleAuthor@gmail.com>

Added git-info command.

Type 'git log' for more commits, or 'git show <commit id>' for full commit details.

## Configuration (.git/config):

color.diff=auto

color.status=auto

$ git fork LearnBoost/expect.js

$ git release 0.1.0

... releasing 0.1.0

On branch development

Your branch is up-to-date with 'origin/development'.

nothing to commit, working directory clean

Total 0 (delta 0), reused 0 (delta 0)

To https://github.com/tj/git-extras.git

9fde751..e62edfa master -> master

Counting objects: 1, done.

Writing objects: 100% (1/1), 166 bytes | 0 bytes/s, done.

Total 1 (delta 0), reused 0 (delta 0)

To https://github.com/tj/git-extras.git

* [new tag] 0.1.0 -> 0.1.0

... complete

$ git contrib visionmedia

visionmedia (18):

Export STATUS_CODES

Replaced several Array.prototype.slice.call() calls with Array.prototype.unshift.call()

Moved help msg to node-repl

$ git repl

git> ls-files

History.md

Makefile

$ git undo

Unstaged changes after reset:

M package.json

M readme.md

$ git gh-pages

$ git scp staging HEAD

$ git setup

Initialized empty Git repository in /GitHub/test/gulp/.git/

[master (root-commit) 9469797] Initial commit

69 files changed, 3900 insertions(+)

create mode 100644 .editorconfig

create mode 100644 .gitignore

create mode 100644 .jscsrc

$ git touch index.js

$ git obliterate secrets.json

Rewrite 2357a4334051a6d1733037406ab7538255030d0b (1/981)rm 'secrets.json'

Rewrite b5f62b2746c23150917d346bd0c50c467f01eb03 (2/981)rm 'secrets.json'

Rewrite 3cd94f3395c2701848f6ff626a0a4f883d8a8433 (3/981)rm 'secrets.json'

$ git feature dependencies

$ git feature finish dependencies

Already up-to-date.

Deleted branch feature/dependencies (was f0fc4c7).

Deleted remote-tracking branch origin/feature/dependencies (was f0fc4c7).

To git@github.com:stevemao/gulp.git

- [deleted] feature/dependencies

$ git local-commits

commit 5f00a3c1bb71876ebdca059fac96b7185dea5467

Merge: 7ad3ef9 841af4e

Author: Blaine Bublitz <blaine@iceddev.com>

Date: Thu Aug 20 11:35:15 2015 -0700

Merge pull request #1211 from JimiHFord/patch-1

Update guidelines.md

commit 841af4ee7aaf55b505354d0e86d7fb876d745e26

Author: Jimi Ford <JimiHFord@users.noreply.github.com>

Date: Thu Aug 20 11:55:38 2015 -0400

Update guidelines.md

fixed typo

$ git archive-file

Building archive on branch "master"

Saved to "gulp.v3.9.0-36-g47cb6b0.zip" ( 60K)

$ git missing master

< d14b8f0 only on current checked out branch

> 97ef387 only on master

$ git lock config/database.yml

$ git locked

config/database.yml

$ git unlock config/database.yml

$ git reset-file README.md HEAD^

Reset 'README.md' to HEAD^

$ git pr 226

From https://github.com/tj/git-extras

* [new ref] refs/pulls/226/head -> pr/226

Switched to branch 'pr/226'

$ git root

/GitHub/git

$ git delta

README.md

$ git merge-repo git@github.com:tj/git-extras.git master .

git fetch git@github.com:tj/git-extras.git master

warning: no common commits

remote: Counting objects: 3507, done.

remote: Compressing objects: 100% (5/5), done.

remote: Total 3507 (delta 1), reused 0 (delta 0), pack-reused 3502

Receiving objects: 100% (3507/3507), 821.12 KiB | 286.00 KiB/s, done.

Resolving deltas: 100% (1986/1986), done.

From github.com:tj/git-extras

* branch master -> FETCH_HEAD

Added dir 'git-merge-repo.E95m0gj'

No local changes to save

$ git psykorebase master

$ git psykorebase --continue

$ git psykorebase master feature

$ git flow init

Which branch should be used for bringing forth production releases?

- changelog

- master

Branch name for production releases: [master]

Which branch should be used for integration of the "next release"?

- changelog

Branch name for "next release" development: [master]

Production and integration branches should differ.

$ git flow feature

$ git flow feature start awesome-feature

$ git flow feature finish awesome-feature

$ git flow feature delete awesome-feature

$ git flow feature publish awesome-feature

$ git flow feature pull remote awesome-feature

$ git flow release

$ git flow release start awesome-release

$ git flow release finish awesome-release

$ git flow release delete awesome-release

$ git flow hotfix

$ git flow hotfix start awesome-release

$ git flow hotfix finish awesome-release

$ git flow hotfix delete awesome-release

$ git flow support

$ git up

Fetching origin

4.0 fast-forwarding...

changelog ahead of upstream

master fast-forwarding...

returning to 4.0

$ git clone schacon/ticgit

> git clone git://github.com/schacon/ticgit.git

$ git clone -p schacon/ticgit

> git clone git@github.com:schacon/ticgit.git

$ git clone resque

> git clone git@github.com/YOUR_USER/resque.git

$ git remote add rtomayko

> git remote add rtomayko git://github.com/rtomayko/CURRENT_REPO.git

$ git remote add -p rtomayko

> git remote add rtomayko git@github.com:rtomayko/CURRENT_REPO.git

$ git remote add origin

> git remote add origin git://github.com/YOUR_USER/CURRENT_REPO.git

$ git fetch mislav

> git remote add mislav git://github.com/mislav/REPO.git

> git fetch mislav

$ git fetch mislav,xoebus

> git remote add mislav ...

> git remote add xoebus ...

> git fetch --multiple mislav xoebus

$ git cherry-pick https://github.com/mislav/REPO/commit/SHA

> git remote add -f --no-tags mislav git://github.com/mislav/REPO.git

> git cherry-pick SHA

$ git cherry-pick mislav@SHA

> git remote add -f --no-tags mislav git://github.com/mislav/CURRENT_REPO.git

> git cherry-pick SHA

$ git cherry-pick mislav@SHA

> git fetch mislav

> git cherry-pick SHA

$ git am https://github.com/github/hub/pull/55

[ downloads patch via API ]

> git am /tmp/55.patch

$ git am --ignore-whitespace https://github.com/davidbalbert/hub/commit/fdb9921

[ downloads patch via API ]

> git am --ignore-whitespace /tmp/fdb9921.patch

$ git apply https://gist.github.com/8da7fb575debd88c54cf

[ downloads patch via API ]

> git apply /tmp/gist-8da7fb575debd88c54cf.txt

$ git fork

[ repo forked on GitHub ]

> git remote add -f YOUR_USER git@github.com:YOUR_USER/CURRENT_REPO.git

$ git pull-request

[ opens text editor to edit title & body for the request ]

[ opened pull request on GitHub for "YOUR_USER:feature" ]

$ git checkout https://github.com/github/hub/pull/73

> git remote add -f --no-tags -t feature mislav git://github.com/mislav/hub.git

> git checkout --track -B mislav-feature mislav/feature

$ git merge https://github.com/github/hub/pull/73

> git fetch git://github.com/mislav/hub.git +refs/heads/feature:refs/remotes/mislav/feature

> git merge mislav/feature --no-ff -m 'Merge pull request #73 from mislav/feature...'

$ git create

[ repo created on GitHub ]

> git remote add origin git@github.com:YOUR_USER/CURRENT_REPO.git

$ git init -g

> git init

> git remote add origin git@github.com:YOUR_USER/REPO.git

$ git push origin,staging,qa bert_timeout

> git push origin bert_timeout

> git push staging bert_timeout

> git push qa bert_timeout

$ git browse

> open https://github.com/YOUR_USER/CURRENT_REPO

$ git compare refactor

> open https://github.com/YOUR_USER/CURRENT_REPO/compare/refactor

$ git submodule add wycats/bundler vendor/bundler

> git submodule add git://github.com/wycats/bundler.git vendor/bundler

$ git ci-status

success
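To make the comma-separated remote shorthand shown above (`git push origin,staging,qa bert_timeout`) concrete, here is a small sketch of the expansion in plain shell. It only prints the commands instead of running them. `expand_push` is a hypothetical helper written for illustration, and it is not how hub itself is implemented.

```shell
#!/bin/sh
# expand_push REMOTES BRANCH
# Hypothetical helper: prints one "git push" line per comma-separated remote.
# The body runs in a subshell so the IFS and globbing changes stay local.
expand_push() (
  IFS=,
  set -f
  for remote in $1; do
    printf 'git push %s %s\n' "$remote" "$2"
  done
)

expand_push origin,staging,qa bert_timeout
# prints:
# git push origin bert_timeout
# git push staging bert_timeout
# git push qa bert_timeout
```

Piping the output to `sh` would run the pushes one after another, which is roughly what the shorthand does for you.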

Collection of `git-tips`. Want to add your tips? Check out contributing.md

- git-tip — A handy CLI to make optimum use of these tips. (Here in Docker container)

P.S: All these commands are tested on git version 2.7.4 (Apple Git-66).

- Everyday Git in twenty commands or so

- Show helpful guides that come with Git

- Search change by content

- Sync with remote, overwrite local changes

- List of all files till a commit

- Git reset first commit

- List all the conflicted files

- List of all files changed in a commit

- Unstaged changes since last commit

- Changes staged for commit

- Show both staged and unstaged changes

- List all branches that are already merged into master

- Quickly switch to the previous branch

- Remove branches that have already been merged with master

- List all branches and their upstreams, as well as last commit on branch

- Track upstream branch

- Delete local branch

- Delete remote branch

- Delete local tag

- Delete remote tag

- Undo local changes with the last content in head

- Revert: Undo a commit by creating a new commit

- Reset: Discard commits, advised for private branch

- Reword the previous commit message

- See commit history for just the current branch

- Amend author.

- Reset author, after author has been changed in the global config.

- Changing a remote’s URL

- Get list of all remote references

- Get list of all local and remote branches

- Get only remote branches

- Stage parts of a changed file, instead of the entire file

- Get git bash completion

- What changed in the last two weeks?

- See all commits made since forking from master

- Pick commits across branches using cherry-pick

- Find out branches containing commit-hash

- Git Aliases

- Saving current state of tracked files without committing

- Saving current state of unstaged changes to tracked files

- Saving current state including untracked files

- Saving current state with message

- Saving current state of all files (ignored, untracked, and tracked)

- Show list of all saved stashes

- Apply any stash without deleting from the stashed list

- Apply last stashed state and delete it from stashed list

- Delete all stored stashes

- Grab a single file from a stash

- Show all tracked files

- Show all untracked files

- Show all ignored files

- Create new working tree from a repository (git 2.5)

- Create new working tree from HEAD state

- Untrack files without deleting

- Before deleting untracked files/directory, do a dry run to get the list of these files/directories

- Forcefully remove untracked files

- Forcefully remove untracked directory

- Update all the submodules

- Show all commits in the current branch yet to be merged to master

- Rename a branch

- Rebases ‘feature’ to ‘master’ and merges it in to master

- Archive the `master` branch

- Modify previous commit without modifying the commit message

- Prunes references to remote branches that have been deleted in the remote.

- Retrieve the commit hash of the initial revision.

- Visualize the version tree.

- Deploying git tracked subfolder to gh-pages

- Adding a project to repo using subtree

- Get latest changes in your repo for a linked project using subtree

- Export a branch with history to a file.

- Import from a bundle

- Get the name of current branch.

- Ignore one file on commit (e.g. Changelog).

- Stash changes before rebasing

- Fetch pull request by ID to a local branch

- Show the most recent tag on the current branch.

- Show inline word diff.

- Show changes using common diff tools.

- Don’t consider changes for tracked file.

- Undo assume-unchanged.

- Clean the files from `.gitignore`.

- Restore deleted file.

- Restore file to a specific commit-hash

- Always rebase instead of merge on pull.

- List all the alias and configs.

- Make git case sensitive.

- Add custom editors.

- Auto correct typos.

- Check if the change was a part of a release.

- Dry run. (any command that supports dry-run flag should do.)

- Marks your commit as a fix of a previous commit.

- Squash fixup commits into normal commits.

- Skip staging area during commit.

- Interactive staging.

- List ignored files.

- Status of ignored files.

- Commits in Branch1 that are not in Branch2

- List n last commits

- Reuse recorded resolution, record and reuse previous conflicts resolutions.

- Open all conflicted files in an editor.

- Count unpacked number of objects and their disk consumption.

- Prune all unreachable objects from the object database.

- Instantly browse your working repository in gitweb.

- View the GPG signatures in the commit log

- Remove entry in the global config.

- Checkout a new branch without any history

- Extract file from another branch.

- List only the root and merge commits.

- Change previous two commits with an interactive rebase.

- List all branches that are WIP

- Find guilty with binary search

- Bypass pre-commit and commit-msg githooks

- List commits and changes to a specific file (even through renaming)

- Clone a single branch

- Create and switch new branch

- Ignore file mode changes on commits

- Turn off git colored terminal output

- Specific color settings

- Show all local branches ordered by recent commits

- Find lines matching the pattern (regex or string) in tracked files

- Clone a shallow copy of a repository

- Search Commit log across all branches for given text

- Get first commit in a branch (from master)

- Unstaging Staged file

- Force push to Remote Repository

- Adding Remote name

- Show the author, time and last revision made to each line of a given file

- Group commits by authors and title

- Forced push but still ensure you don’t overwrite others’ work

- Show how many lines an author has contributed

- Revert: Reverting an entire merge

- Number of commits in a branch

- Alias: git undo

- Add object notes

- Show all the git-notes

- Apply commit from another repository

- Specific fetch reference

- Find common ancestor of two branches

- List unpushed git commits

- Add everything, but whitespace changes

- Edit [local/global] git config

- Blame on a certain range

- Show a Git logical variable.

- Preformatted patch file.

- Get the repo name.

- Logs between a date range

- Exclude author from logs

- Generates a summary of pending changes

- List references in a remote repository

- Backup untracked files.

- List all git aliases

- Show git status short

- Checkout a commit prior to a day ago

- Push a new local branch to remote repository and track

git help everyday

git help -g

git log -S'<a term in the source>'

git fetch origin && git reset --hard origin/master && git clean -f -d

git ls-tree --name-only -r <commit-ish>

git update-ref -d HEAD

git diff --name-only --diff-filter=U

git diff-tree --no-commit-id --name-only -r <commit-ish>

git diff

git diff --cached

Alternatives:

git diff --staged

git diff HEAD

git branch --merged master

git checkout -

Alternatives:

git checkout @{-1}

git branch --merged master | grep -v '^\*' | xargs -n 1 git branch -d

Alternatives:

git branch --merged master | grep -v '^\*\| master' | xargs -n 1 git branch -d # will not delete master if master is not checked out

git branch -vv

git branch -u origin/mybranch

git branch -d <local_branchname>

git push origin --delete <remote_branchname>

Alternatives:

git push origin :<remote_branchname>

git tag -d <tag-name>

git push origin :refs/tags/<tag-name>

git checkout -- <file_name>

git revert <commit-ish>

git reset <commit-ish>

git commit -v --amend

git cherry -v master

git commit --amend --author='Author Name <email@address.com>'

git commit --amend --reset-author --no-edit

git remote set-url origin <URL>

git remote

Alternatives:

git remote show

git branch -a

git branch -r

git add -p

curl http://git.io/vfhol > ~/.git-completion.bash && echo '[ -f ~/.git-completion.bash ] && . ~/.git-completion.bash' >> ~/.bashrc

git log --no-merges --raw --since='2 weeks ago'

Alternatives:

git whatchanged --since='2 weeks ago'

git log --no-merges --stat --reverse master..

git checkout <branch-name> && git cherry-pick <commit-ish>

git branch -a --contains <commit-ish>

Alternatives:

git branch --contains <commit-ish>

git config --global alias.<handle> <command>

git config --global alias.st status

git stash

Alternatives:

git stash save

git stash -k

Alternatives:

git stash --keep-index

git stash save --keep-index

git stash -u

Alternatives:

git stash save -u

git stash save --include-untracked

git stash save <message>

git stash -a

Alternatives:

git stash --all

git stash save --all

git stash list

git stash apply <stash@{n}>

git stash pop

Alternatives:

git stash apply stash@{0} && git stash drop stash@{0}

git stash clear

Alternatives:

git stash drop <stash@{n}>

git checkout <stash@{n}> -- <file_path>

Alternatives:

git checkout stash@{0} -- <file_path>

git ls-files -t

git ls-files --others

git ls-files --others -i --exclude-standard

git worktree add -b <branch-name> <path> <start-point>

git worktree add --detach <path> HEAD

git rm --cached <file_path>

Alternatives:

git rm --cached -r <directory_path>

git clean -n

git clean -f

git clean -f -d

git submodule foreach git pull

Alternatives:

git submodule update --init --recursive

git submodule update --remote

git cherry -v master

Alternatives:

git cherry -v master <branch-to-be-merged>

git branch -m <new-branch-name>

Alternatives:

git branch -m [<old-branch-name>] <new-branch-name>

git rebase master feature && git checkout master && git merge -

git archive master --format=zip --output=master.zip

git add --all && git commit --amend --no-edit

git fetch -p

Alternatives:

git remote prune origin

git rev-list --reverse HEAD | head -1

Alternatives:

git rev-list --max-parents=0 HEAD

git log --pretty=oneline | tail -1 | cut -c 1-40

git log --pretty=oneline --reverse | head -1 | cut -c 1-40

git log --pretty=oneline --graph --decorate --all

Alternatives:

gitk --all

git subtree push --prefix subfolder_name origin gh-pages

git subtree add --prefix=<directory_name>/<project_name> --squash git@github.com:<username>/<project_name>.git master

git subtree pull --prefix=<directory_name>/<project_name> --squash git@github.com:<username>/<project_name>.git master

git bundle create <file> <branch-name>

git clone repo.bundle <repo-dir> -b <branch-name>

git rev-parse --abbrev-ref HEAD

git update-index --assume-unchanged Changelog; git commit -a; git update-index --no-assume-unchanged Changelog

git rebase --autostash

git fetch origin pull/<id>/head:<branch-name>

Alternatives:

git pull origin pull/<id>/head:<branch-name>

git describe --tags --abbrev=0

git diff --word-diff

git difftool -t <tool> <commit1> <commit2> <path>

git update-index --assume-unchanged <file_name>

git update-index --no-assume-unchanged <file_name>

git clean -X -f

git checkout <deleting_commit>^ -- <file_path>

git checkout <commit-ish> -- <file_path>

git config --global pull.rebase true

Alternatives:

# git < 1.7.9

git config --global branch.autosetuprebase always

git config --list

git config --global core.ignorecase false

git config --global core.editor '$EDITOR'

git config --global help.autocorrect 1

git name-rev --name-only <SHA-1>

git clean -fd --dry-run

git commit --fixup <SHA-1>

git rebase -i --autosquash

git commit --only <file_path>

git add -i

git check-ignore *

git status --ignored

git log Branch1 ^Branch2

git log -<n>

Alternatives:

git log -n <n>

git config --global rerere.enabled 1

git diff --name-only | uniq | xargs $EDITOR

git count-objects --human-readable

git gc --prune=now --aggressive

git instaweb [--local] [--httpd=<httpd>] [--port=<port>] [--browser=<browser>]

git log --show-signature

git config --global --unset <entry-name>

git checkout --orphan <branch_name>

git show <branch_name>:<file_name>

git log --first-parent

git rebase --interactive HEAD~2

git checkout master && git branch --no-merged

git bisect start # Search start

git bisect bad # Set point to bad commit

git bisect good v2.6.13-rc2 # Set point to good commit|tag

git bisect bad # Say current state is bad

git bisect good # Say current state is good

git bisect reset # Finish search
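The manual good/bad marking above can also be automated with `git bisect run`, which checks out each candidate commit and runs a command whose exit status decides good (0) or bad (1). Here is a minimal sketch, assuming a throwaway repository: the files `state.txt` and `counter.txt`, the ten commits, and the "bug" introduced in commit 7 are all made up for illustration.

```shell
#!/bin/sh
# Build a throwaway repository so no real project is touched.
repo=$(mktemp -d)
cd "$repo" || exit 1
git init -q
git config user.name "Example User"
git config user.email "user@example.com"
git config commit.gpgsign false

# Ten commits; the made-up bug appears in commit 7 and stays.
i=1
while [ "$i" -le 10 ]; do
  if [ "$i" -ge 7 ]; then echo "broken" > state.txt; else echo "ok" > state.txt; fi
  echo "$i" > counter.txt
  git add state.txt counter.txt
  git commit -q -m "ADD change $i"
  i=$((i + 1))
done

# Mark HEAD as bad and the root commit as good, then let git drive the search.
first=$(git rev-list --max-parents=0 HEAD)
git bisect start HEAD "$first" > /dev/null
# grep exits 0 (good) while state.txt still says "ok", and 1 (bad) afterwards.
git bisect run grep -q '^ok$' state.txt > /dev/null

# refs/bisect/bad now points at the first bad commit.
culprit_subject=$(git log -1 --format=%s refs/bisect/bad)
echo "first bad commit: $culprit_subject"
# prints: first bad commit: ADD change 7

git bisect reset > /dev/null 2>&1 # Finish search
```

In a real project the `grep` call would be replaced by whatever test command reproduces the problem.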

git commit --no-verify

git log --follow -p -- <file_path>

git clone -b <branch-name> --single-branch https://github.com/user/repo.git

git checkout -b <branch-name>

Alternatives:

git branch <branch-name> && git checkout <branch-name>

git config core.fileMode false

git config --global color.ui false

git config --global <specific command e.g branch, diff> <true, false or always>

git for-each-ref --sort=-committerdate --format='%(refname:short)' refs/heads/

git grep --heading --line-number 'foo bar'

git clone https://github.com/user/repo.git --depth 1

git log --all --grep='<given-text>'

git log master..<branch-name> --oneline | tail -1

git reset --soft HEAD

git reset HEAD <file-name>

git push -f <remote-name> <branch-name>

git remote add <remote-nickname> <remote-url>

git blame <file-name>

git shortlog

git push --force-with-lease <remote-name> <branch-name>

git revert -m 1 <commit-ish>

git rev-list --count <branch-name>

git config --global alias.undo '!f() { git reset --hard $(git rev-parse --abbrev-ref HEAD)@{${1-1}}; }; f'

git notes add -m 'Note on the previous commit....'

git log --show-notes='*'

git --git-dir=<source-dir>/.git format-patch -k -1 --stdout <SHA1> | git am -3 -k

git fetch origin master:refs/remotes/origin/mymaster

diff -u <(git rev-list --first-parent BranchA) <(git rev-list --first-parent BranchB) | sed -ne 's/^ //p' | head -1

git log --branches --not --remotes

Alternatives:

git log @{u}..

git cherry -v

git diff --ignore-all-space | git apply --cached

git config [--global] --edit

git blame -L <start>,<end>

git var -l | <variable>

git format-patch -M upstream..topic

git rev-parse --show-toplevel

git log --since='FEB 1 2017' --until='FEB 14 2017'

git log --perl-regexp --author='^((?!excluded-author-regex).*)$'

git request-pull v1.0 https://git.ko.xz/project master:for-linus

git ls-remote git://git.kernel.org/pub/scm/git/git.git

git ls-files --others -i --exclude-standard | xargs zip untracked.zip

git config -l | grep alias | sed 's/^alias\.//g'

Alternatives:

git config -l | grep alias | cut -d '.' -f 2

git status --short --branch

git checkout master@{yesterday}

git push -u origin <branch_name>

Make sure you follow these simple rules when contributing.

- Every Pull Request must have a title.

- Every Pull Request must have a description.

- Write the title and description of what you have done in the imperative mood, that is, as if you were commanding someone.

- ✅ DO: Start the line with “FIX”, “ADD”, “IMPROVE”.

- ❌ DON’T: Start with “Fixed”, “Added”, “Adding”, “Improved”.

- 🎯 Read → How to Write a Git Commit Message!

- Don’t end the summary line with a period – it’s a title and titles don’t end with a period.

- Have fun.
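The title rules above can be checked mechanically before opening a pull request. Here is a minimal sketch in plain shell. `check_title` is a hypothetical helper, not part of Git or GitHub. Accepting only the three verbs named above, each followed by a space or a colon, is a simplifying assumption.

```shell
#!/bin/sh
# check_title TITLE
# Hypothetical helper: prints "ok" and returns 0 when the title follows the
# rules above, otherwise prints the first problem found and returns 1.
check_title() {
  title=$1
  case $title in
    "") echo "missing title"; return 1 ;;
  esac
  case $title in
    *.) echo "ends with a period"; return 1 ;;
  esac
  case $title in
    "FIX "*|"FIX:"*|"ADD "*|"ADD:"*|"IMPROVE "*|"IMPROVE:"*) ;;
    *) echo "does not start with FIX, ADD or IMPROVE"; return 1 ;;
  esac
  echo "ok"
}

check_title "FIX typo in README"            # prints "ok"
check_title "Fixed typo in README." || true # prints "ends with a period"
```

The same function could be called from a `commit-msg` hook on the first line of the message, if you want the check to run on every commit.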

This open source project is maintained with the help of the awesome businesses listed below. What? Read more about it →


MIT © Ahmad Awais

| ahmadawais | wpcontentstudio | worwox | driftikharahmad | ahmadbilalme | finktanks |

| mrbilloo | wpbizreview | NidaBatool | usmanworwox | TheWPCouple | a2podcast |

| nighatiftikhar | MahamBatool | TheOpenDeveloper | WPMetaList | freakify | finktanksceo |

| TheDevCouple | ahmedzerar | alexruf | mrumair | AliRaza1844 | AdnanMuhib |

| arximughal | prubianes | saqibameen | Elias504 | Endless7188 | shoaibahmedqureshi |

| GayathriVenkatesh | jamezrin | julicheng | WisdomSky | kkdroidgit | luisfmelo |

| k0R73z | michaelwright74 | green-leaves | omerl13 | Omkar-Ajnadkar | petrkle |

| starchow | darpr | vyaspranjal33 | RajithaFernando | rchung95 | riacheruvu |

| MarkisDev | Burnett2k | SnehPandya18 | sudaisasif | saayv1 | WajahatAnwar |

| ybaruchel | alljp | kuwali | Smith-erin |

For anything else, tweet at @MrAhmadAwais

I have released a video course to help you become a better developer — Become a VSCode Power User →


https://github.com/ade-dayo/HacktoberProject
